The IRCAM research professor Gérard Assayag has been awarded a 2019 ERC Advanced Grant for the REACH project (Raising co-creativity in cyber-human Musicianship), which enables him to continue his work on cyber-human musical co-creation. He founded the Musical Representations team at the Science and Technology of Music and Sound Laboratory (STMS: CNRS/IRCAM/Culture and Communication Ministry/Sorbonne University) and models creative musical intelligence. Let’s meet this researcher, who talks about his work with passion.

Gérard Assayag’s approach is part of a research-creation strategy which is close to the artistic process. In his research work, he makes the two fields of music and science interact and thus helps both fields progress.

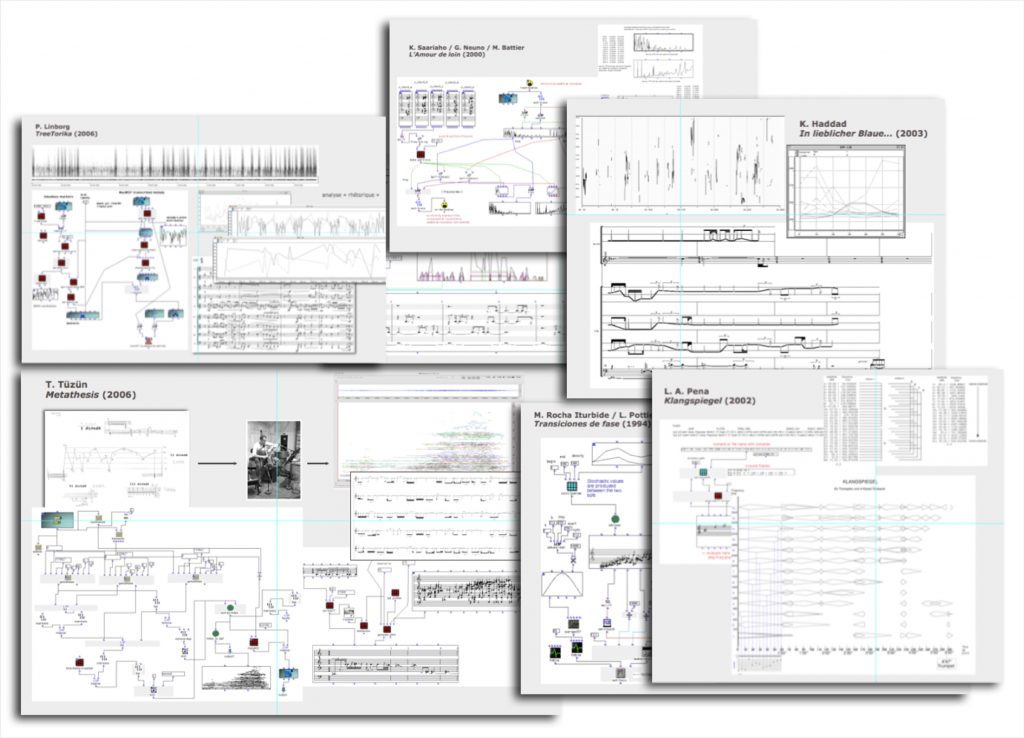

IRCAM is unique worldwide in bringing artists and scientists together in a common approach to knowledge and experimentation. Gérard Assayag began his career there as a researcher, working on a project that would gain international recognition over the years. He and his team designed the computer-assisted composition software OpenMusic, which features a visual programming language that is easily accessible, is widely used by musicians and musicologists, and also has an educational dimension. « It is a creative programme which generates new artistic ideas to test and try them out. As a result of this project, we started to look at direct forms of interaction between computers and musicians, » explains the researcher.

How can musical ideas be developed jointly by a musician and a machine through a sound communication channel? How can a machine interact « creatively » with a musician? These questions raise new research issues involving artificial creativity. It has recently been the subject of study inspired by the human creative process, with a focus on understanding past and present situations and anticipating future ones. Improvisation serves as a borderline case which is complex to model because it brings together the richest and most effective characteristics of human creativity. Within extremely short time spans (a few tens of milliseconds), improvisation produces highly structured messages in response to an evolving sonic environment and exhibits action strategies on several temporal scales.

Musical improvisation is thus a key theme for the RepMus team at STMS, directed by Gérard Assayag. This has led to numerous international collaborations with great musicians in the field (including Bernard Lubat, Steve Coleman, Roscoe Mitchell, Mike Garson, George Lewis, Evan Parker, Steve Lehman and many others). Gérard Assayag’s team have created computer programmes linked to work on ‘Musical Information Dynamics’ (an application of information theory to music). These include OMax, which models certain improvisation processes and is capable of dialoguing with a musician on stage, and SoMax, which implements a cognitive model of creative and contextually reactive musical memory.

Gérard Assayag is a pioneer in this field. With his team he created the OMax software, which is used by many musicians, for example at a concert in New York where Bernard Lubat (piano) performed alongside Gérard Assayag running the OMax programme. Several National Research Agency projects have addressed this subject (IMPROTECH, SOR2, DYCI2, MERCI).

« Embodiment represents a sensitive form of contact with digital technology which truly creates a mixed form of reality for a musician. » Gérard Assayag, IRCAM research professor

The research professor is now drawing all this work together and opening up new perspectives thanks to this ERC Advanced Grant for the REACH project. His objective is to highlight co-creativity in cyber-human musical interactions.

« This is a sort of artificial musical intelligence, artificial musicality (“machine musicianship”). The term cyber-human expresses the continuity between human cognition and digital virtuality in the same way as cyber-physics expresses continuity between the digital and physical spheres. Here, the continuity involves creativity thanks to cyber-human co-action, » Gérard Assayag explains. « Improvised musical interactions summarise many situations from everyday life in the same way as we’re improvising our speech in this conversation, » he adds.

But how can a programme generate music that humans can understand? This is where computing comes in, through modelling, AI techniques and machine learning. Today’s research aims to understand more about the « symbiotic interactions » between humans and machines, facilitated by enhanced capture of physical and human signals and by learning. This paves the way for new forms of mixed reality to achieve embodiment (physical involvement) and co-creation.

A phase of field observation and experimentation is required to bring this co-creation into play and better understand creative intelligence. In this context of improvised practices, concerts are well suited to this type of experiment. From a methodological point of view, the ERC REACH project therefore aims to create a dual convergent movement: from digital to human (improving artificial agents’ analysis and production capacities) and from human to digital (enhancing a subject’s involvement in the hybrid experience through embodiment). The aim is to produce an immersive and embodied symbiotic experience. « This is a chiasmus: we hope that the two research streams will intersect in the middle to produce new objects, » summarises the researcher about his ERC grant project.

This project therefore has a solid interdisciplinary base, mirroring IRCAM, which is hosting it. Gérard Assayag’s ERC project combines computer science, music, cognitive sciences, anthropology and sociology through his long-standing collaboration with Marc Chemillier at the École des Hautes Études en Sciences Sociales (EHESS). Collaborations with industry are being set up, for example with Hyvibe, an STMS start-up that works on acoustic instruments augmented by numerous sensors, such as the « Smart Guitar ». An international network is also being set up involving several academic actors such as the University of Tokyo, the EHESS and IRCAM. Finally, the major ImproTech event, which links scientific workshops and a music festival, is organised each year. The ERC grant will enable this type of international event to continue.

Research into computational creativity involves Artificial Intelligence techniques like statistical learning, optimisation and deep learning. However, this work also brings up philosophical questions, such as whether we can really talk about creativity with regard to a machine. « The very nature of the question lies in the fact that the machine is not a subject in itself, which means we think more in terms of interaction and relationships. In the past, we used statistical learning, namely observing what humans do over time to obtain models of sequences (forms, patterns). Now, the new AI techniques like generative learning of representations mean we are faced with large-scale problems. This is because music produces multivariate signals (several simultaneous independent and coherent dimensions) that need to be modelled at various structural or semantic levels, » says Gérard Assayag about the challenges facing his project.
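To give a concrete idea of what "observing what humans do over time to obtain models of sequences" can mean in its simplest form, here is a minimal Python sketch. It is an illustration only and not the method used in OMax, SoMax or the REACH project: it assumes a first-order Markov model (each note depends only on the previous one), and the phrase, the function names and the MIDI pitch encoding are all hypothetical choices made for the example.

```python
import random
from collections import Counter, defaultdict


def learn_transitions(notes):
    """Count how often each note is followed by each other note."""
    transitions = defaultdict(Counter)
    for current, following in zip(notes, notes[1:]):
        transitions[current][following] += 1
    return transitions


def continue_phrase(transitions, start, length, rng):
    """Generate a phrase by sampling successors in proportion to observed counts."""
    phrase = [start]
    for _ in range(length - 1):
        successors = transitions.get(phrase[-1])
        if not successors:  # note never observed with a successor: stop early
            break
        choices, weights = zip(*successors.items())
        phrase.append(rng.choices(choices, weights=weights)[0])
    return phrase


# Hypothetical phrase played by a musician, encoded as MIDI pitch numbers
# (an assumption for this example, not data from the project).
observed = [60, 62, 64, 62, 60, 64, 65, 67, 65, 64, 62, 60]

model = learn_transitions(observed)
generated = continue_phrase(model, start=60, length=8, rng=random.Random(0))
print(generated)
```

Every transition in the generated phrase was heard at least once in the observed phrase, so the output recombines the musician's own material. A model this small captures only one dimension (pitch) and one step of memory, which hints at why the multivariate, multi-level signals mentioned in the quote are much harder to model.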

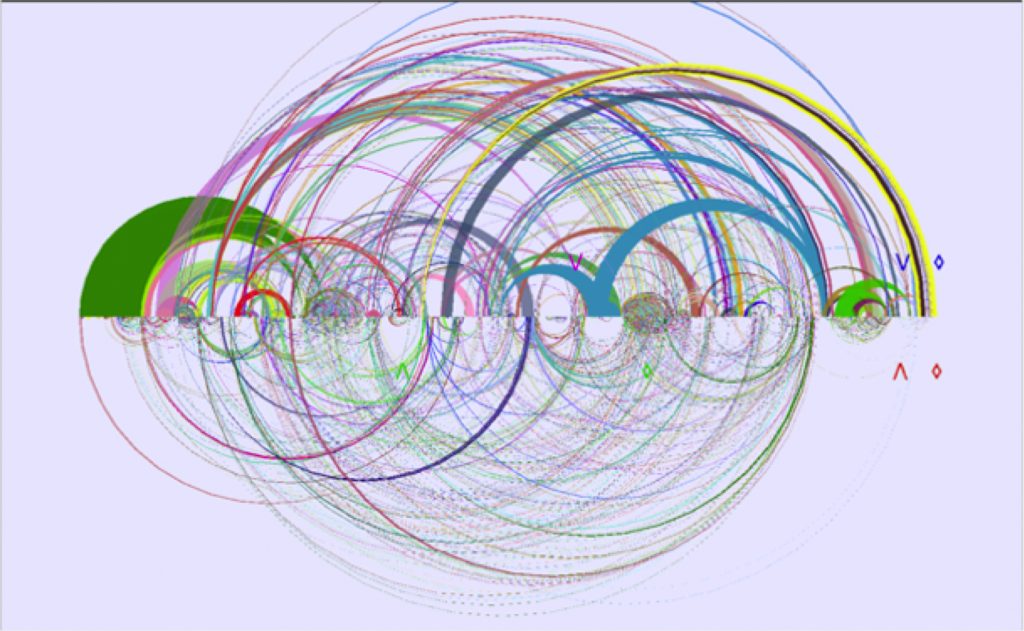

« When we create interactions between complex systems like humans and machines, we do not just observe a simple addition of behaviours. Instead we focus on the ‘emergence’ of concomitant and coherent forms of behaviour that cannot be simply explained by their separate components, » the researcher explains. These emergence processes bring forth, in a non-linear way, new musical forms that are not easy to predict, and this is what we call co-creation.

« In some ways, humans learn from machines as well. Humans may change their musical tactics according to what the machine is feeding back to them, but in return machines will continue to learn from human reactions. This forms a cross-learning loop with feedback and brings up reinforcement mechanisms which are well known in AI. This complexity is what we call co-creativity, which is what we study. » Gérard Assayag, IRCAM research professor

A demonstration for the ‘Neurones, les intelligences simulées’ exhibition (Neurones, simulated intelligence), part of Mutations/Créations 4 at the Georges Pompidou Centre (February 26th to April 27th 2020), illustrating autonomous creative agents developed by Gérard Assayag’s team, one of the building blocks of the ERC REACH project.

The work of Gérard Assayag and his team could make it possible to better decipher our creative behaviour, particularly the way in which knowledge, intuition, acts of will, communication and poiesis (the action of doing and creating) fit together in co-action situations, whether this involves humans, machines or both.

Technically, AI is limited by its learning mode in this respect. It looks for regularity in patterns when it learns and analyses data, and therefore seeks stereotypes which are the opposite of creativity! This is a major epistemological problem that needs to be taken into account. « The challenge is to reproduce human know-how and therefore its creative dimension through new computational strategies to achieve less stereotyped AI learning, » Gérard Assayag sums up.