On Monday 25 November at 19:00 (GMT+1), Marco Fiorini will lead an Online Max Meetup for Notam, the Norwegian centre for technology, art and music. The session will present Somax2 and discuss Music Improvisation and Composition with Co-Creative Agents, showcasing concrete research and artistic outcomes of the REACH project to the online Max community. […]

Author: reachedit

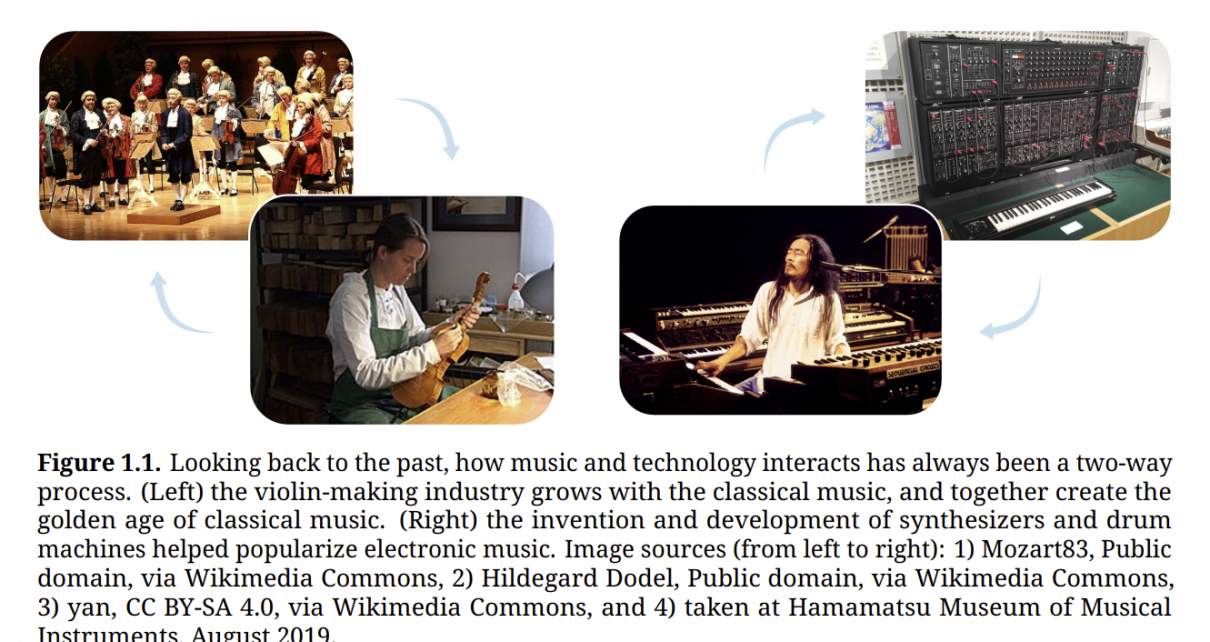

Generative AI for Music and Audio

By Hao-Wen Dong. Sound [cs.SD]. University of California San Diego, 2024. English. Read full publication. Abstract: Generative AI has been transforming the way we interact with technology and consume content. In the next decade, AI technology will reshape how we create audio content in various media, including music, theater, films, games, podcasts, and short videos. […]

Cyber-improvisations et cocréativité, quand le jazz joue avec les machines (Cyber-improvisations and co-creativity: when jazz plays with machines)

Gérard Assayag, Marc Chemillier, Bernard Lubat. 2023, pp.38-39. Read full article. Abstract: Could you tell us how you met and what made you want to work together? Marc Chemillier: With Gérard Assayag, we began designing software for musical improvisation in the early 2000s. Gérard was working on stylistic simulation and was exploring […]

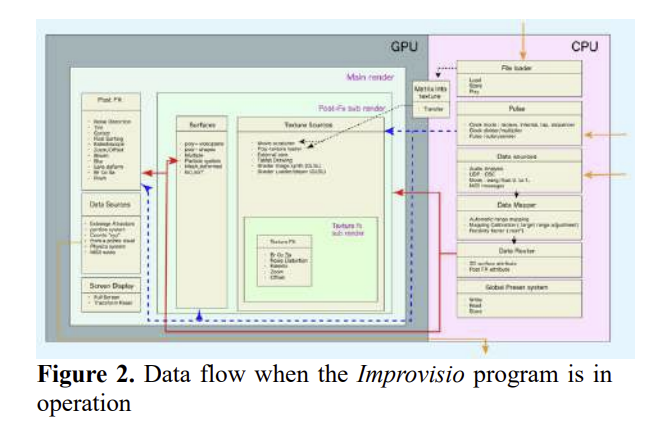

Improvisio: towards a visual music improvisation tool for musicians in a cyber-human co-creation context

By Sabina Covarrubias Stms. Journées d’informatique musicale, Micael Antunes; Jonathan Bell; Javier Elipe Gimeno; Mylène Gioffredo; Charles de Paiva Santana; Vincent Tiffon, May 2024, Marseille, France. Read full publication. Abstract: Improvisio is a software for musicians who want to improvise visual music. Its development is part of the REACH project. It is useful to create visual […]

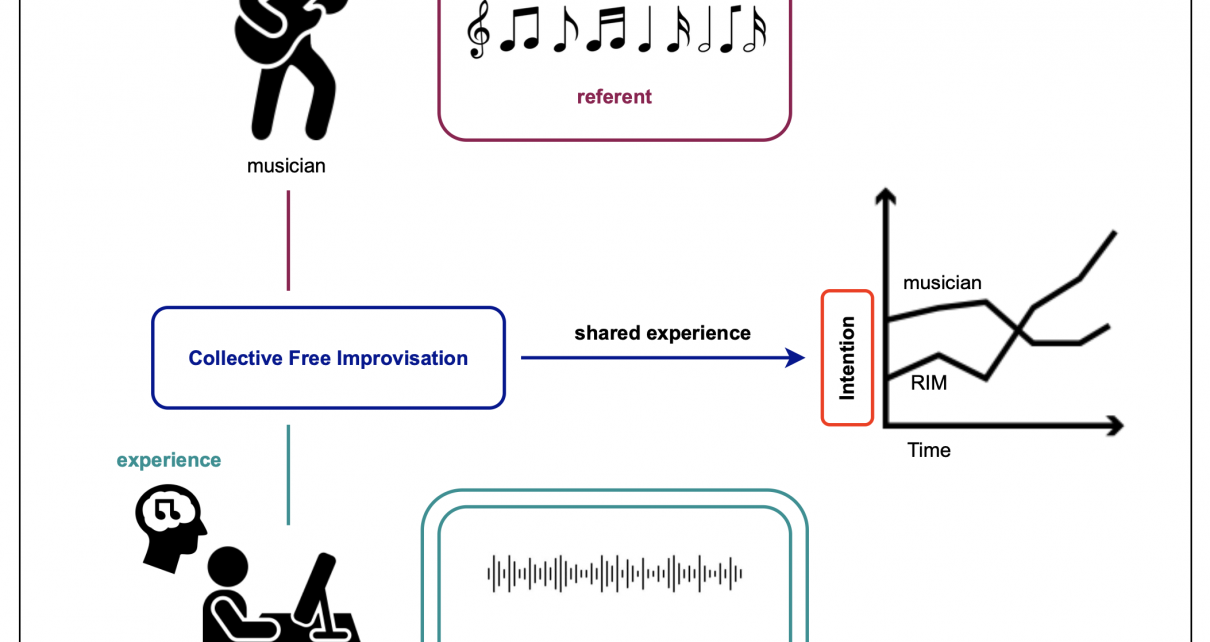

Being the Artificial Player: Good Practices in Collective Human-Machine Music Improvisation

An article by Marco Fiorini (STMS – IRCAM, Sorbonne Université, CNRS) has been accepted for the 13th EAI International Conference: ArtsIT, Interactivity & Game Creation at New York University in Abu Dhabi, United Arab Emirates. Read the full paper. Abstract: This essay explores the use of generative AI systems in cocreativity within musical improvisation, offering best practices for […]

Preparing the Boulez Somax2 IRCAM variations for concert at Carnegie Hall with ICE

Levy Lorenzo from the International Contemporary Ensemble is in the studio at IRCAM with Marco Fiorini, Gérard Assayag and George Lewis to work on preliminary Somax2 tests for the upcoming homage concert to Pierre Boulez that will take place at Carnegie Hall in New York on 30 January 2025, with the world premiere of Boulez Somax2 […]

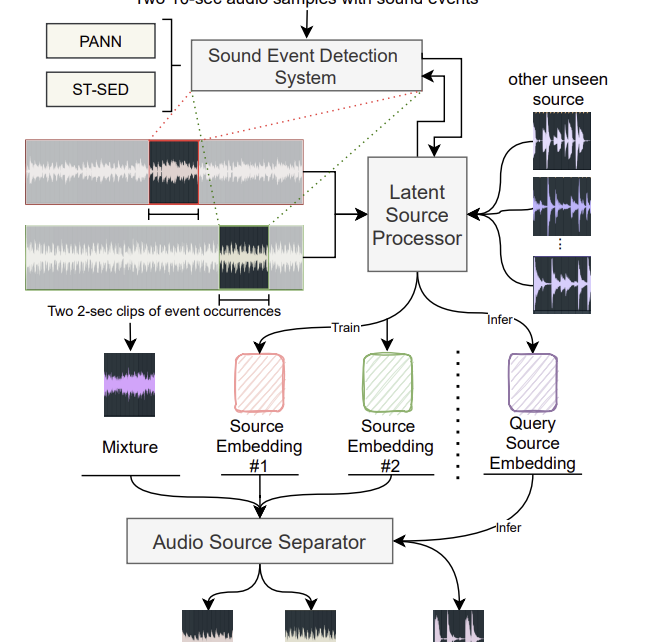

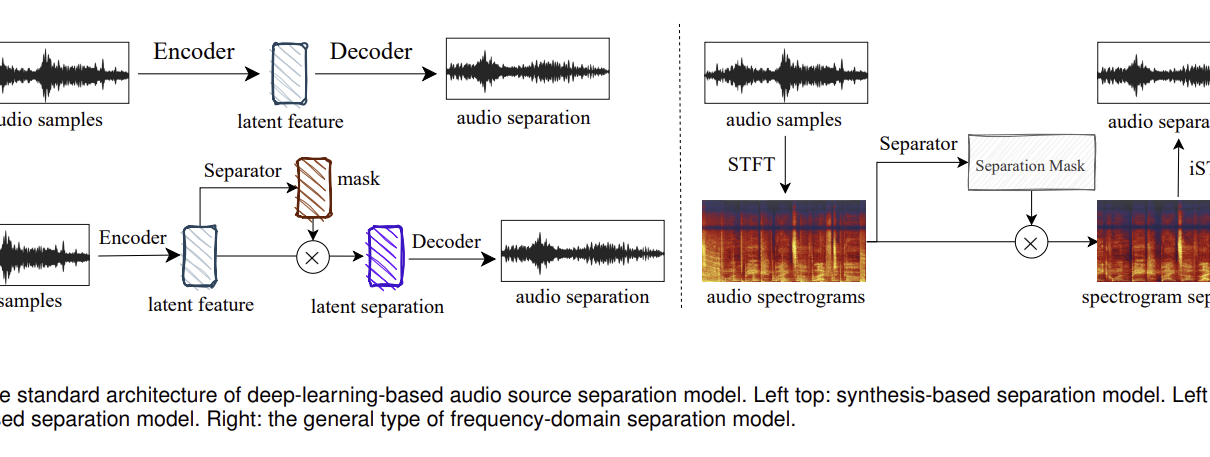

Zero-Shot Audio Source Separation through Query-Based Learning from Weakly-Labeled Data

Ke Chen, Xingjian Du, Bilei Zhu, Zejun Ma, Taylor Berg-Kirkpatrick, Shlomo Dubnov. Proceedings of the AAAI Conference on Artificial Intelligence, 2022, Remote Conference, France. pp.4441-4449. Read full publication. Abstract: Deep learning techniques for separating audio into different sound sources face several challenges. Standard architectures require training separate models for different types of audio sources. Although […]

Computational Auditory Scene Analysis with Weakly Labelled Data

By Qiuqiang Kong, Ke Chen, Haohe Liu, Xingjian Du, Taylor Berg-Kirkpatrick, Shlomo Dubnov, Mark D Plumbley. Read full publication. Abstract: Universal source separation (USS) is a fundamental research task for computational auditory scene analysis, which aims to separate mono recordings into individual source tracks. There are three potential challenges awaiting the solution to the audio source […]