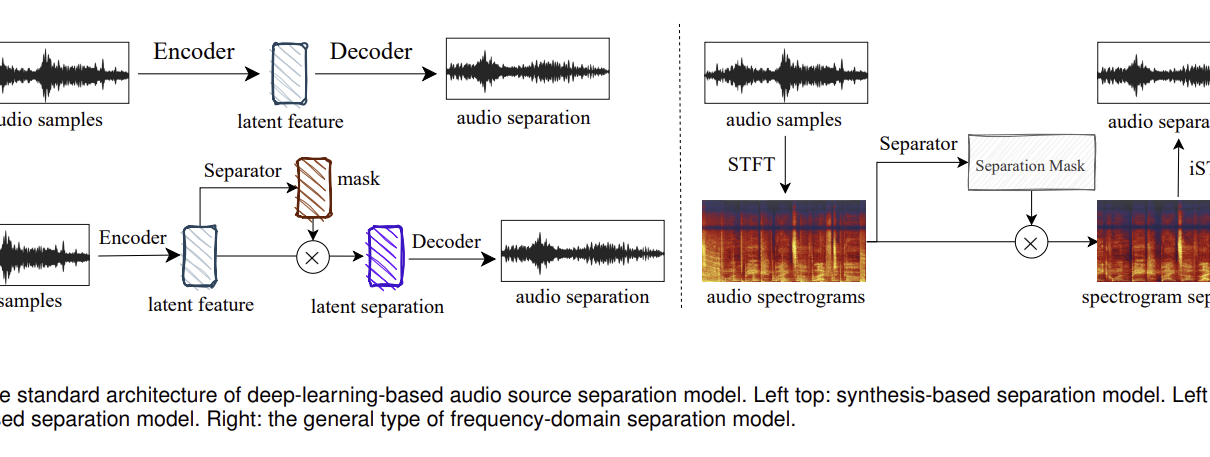

An article by Marco Fiorini (STMS – IRCAM, Sorbonne Université, CNRS) has been accepted for the 13th EAI International Conference: ArtsIT, Interactivity & Game Creation at New York University in Abu Dhabi, United Arab Emirates. Read the full paper. Abstract: This essay explores the use of generative AI systems in cocreativity within musical improvisation, offering best practices for […]

Research

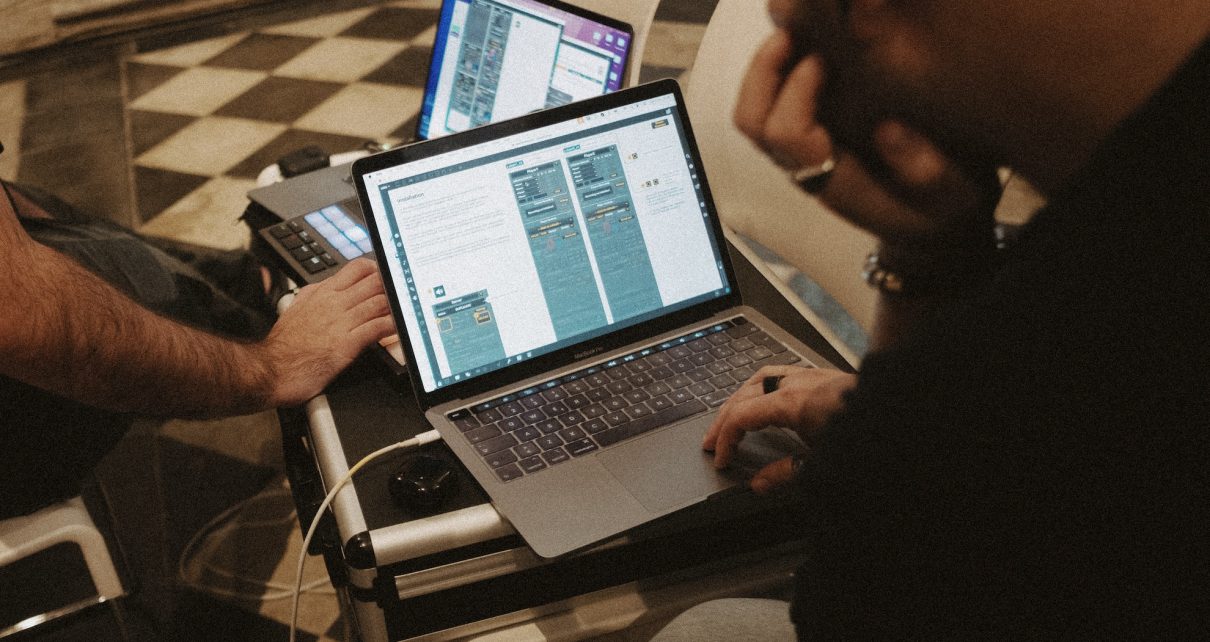

Preparing the Boulez Somax2 IRCAM variations for concert at Carnegie Hall with ICE

Levy Lorenzo from the International Contemporary Ensemble is in the studio at IRCAM with Marco Fiorini, Gérard Assayag and George Lewis to work on preliminary Somax2 tests for the upcoming concert in homage to Pierre Boulez, which will take place at Carnegie Hall in New York on 30 January 2025, with the world premiere of Boulez Somax2 […]

Zero-Shot Audio Source Separation through Query-Based Learning from Weakly-Labeled Data

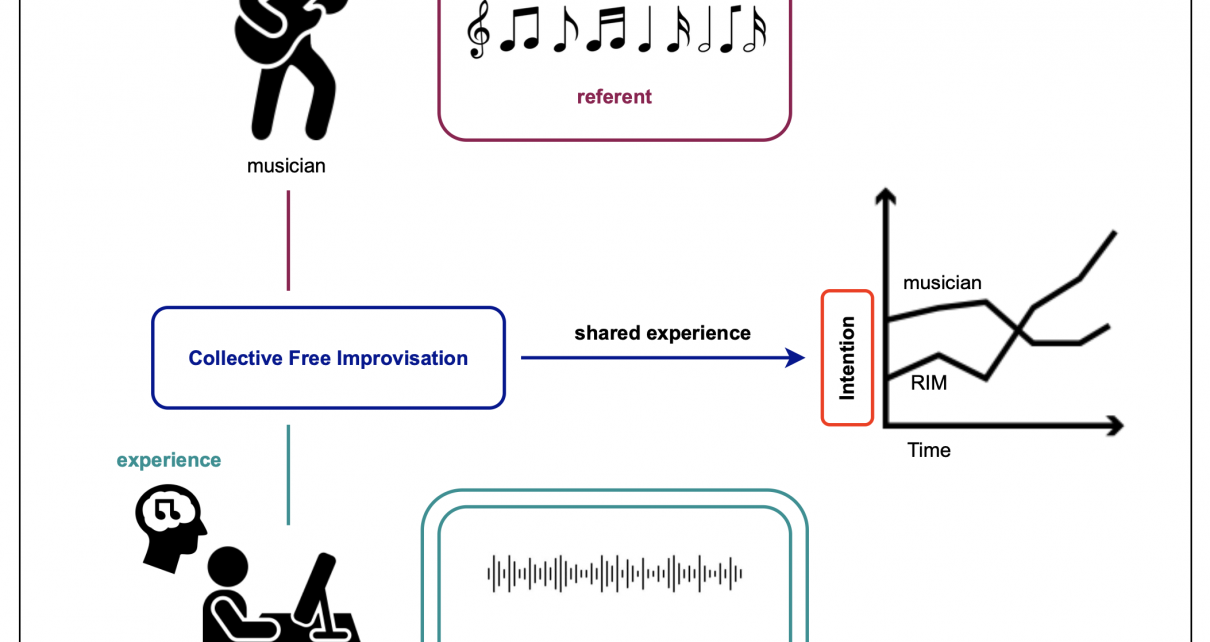

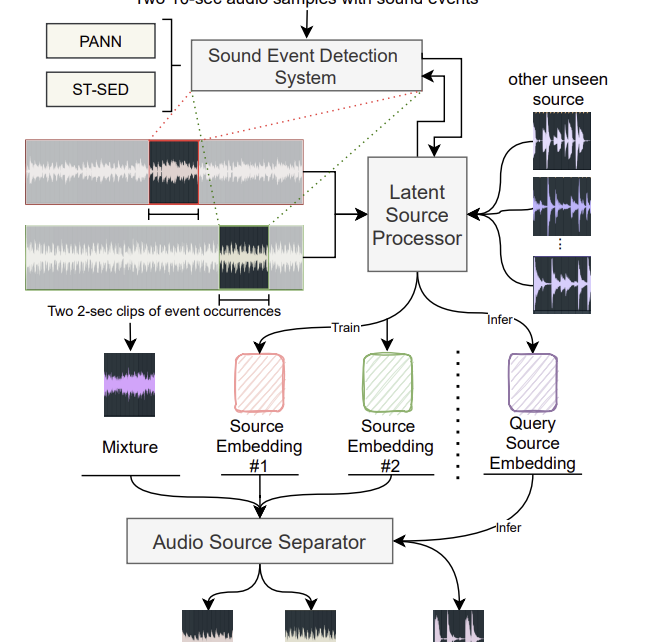

Ke Chen, Xingjian Du, Bilei Zhu, Zejun Ma, Taylor Berg-Kirkpatrick, Shlomo Dubnov. Proceedings of the AAAI Conference on Artificial Intelligence, 2022, Remote Conference, France. pp.4441-4449. Read full publication. Abstract: Deep learning techniques for separating audio into different sound sources face several challenges. Standard architectures require training separate models for different types of audio sources. Although […]

Computational Auditory Scene Analysis with Weakly Labelled Data

By Qiuqiang Kong, Ke Chen, Haohe Liu, Xingjian Du, Taylor Berg-Kirkpatrick, Shlomo Dubnov, Mark D Plumbley. Read full publication. Abstract: Universal source separation (USS) is a fundamental research task for computational auditory scene analysis, which aims to separate mono recordings into individual source tracks. There are three potential challenges awaiting the solution to the audio source […]

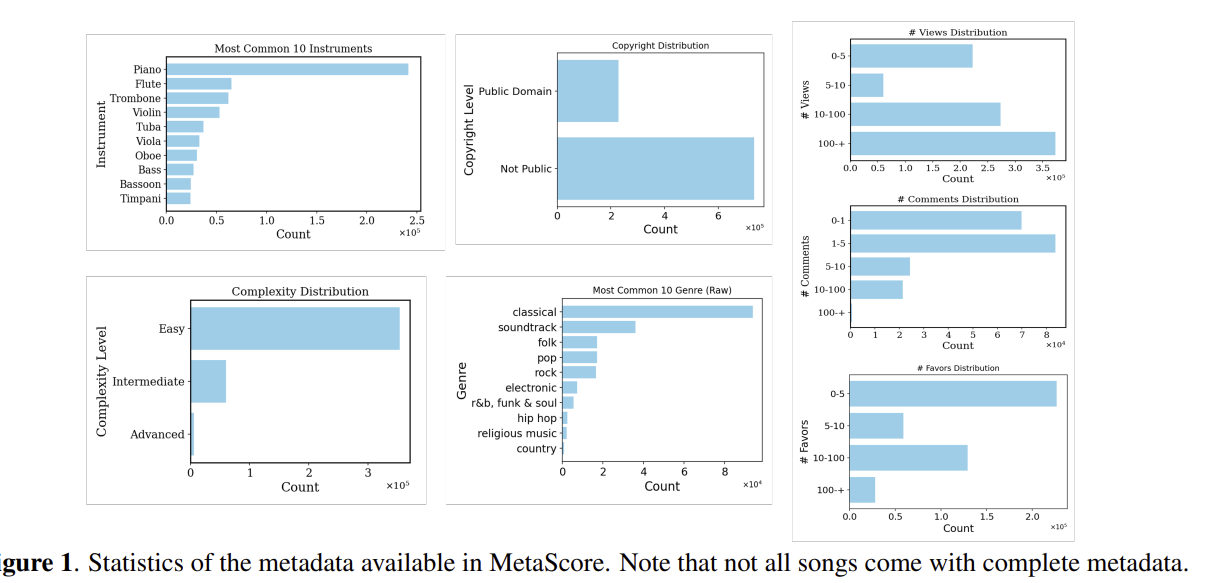

A New Dataset for Tag- and Text-based Controllable Symbolic Music Generation

By Weihan Xu, Julian McAuley, Taylor Berg-Kirkpatrick, Shlomo Dubnov, Hao-Wen Dong. ISMIR Late-Breaking Demos, Nov 2024, San Francisco, United States. Read full publication. Abstract: Recent years have seen many audio-domain text-to-music generation models that rely on large amounts of text-audio pairs for training. However, similar attempts for symbolic-domain controllable music generation has been hindered due to […]

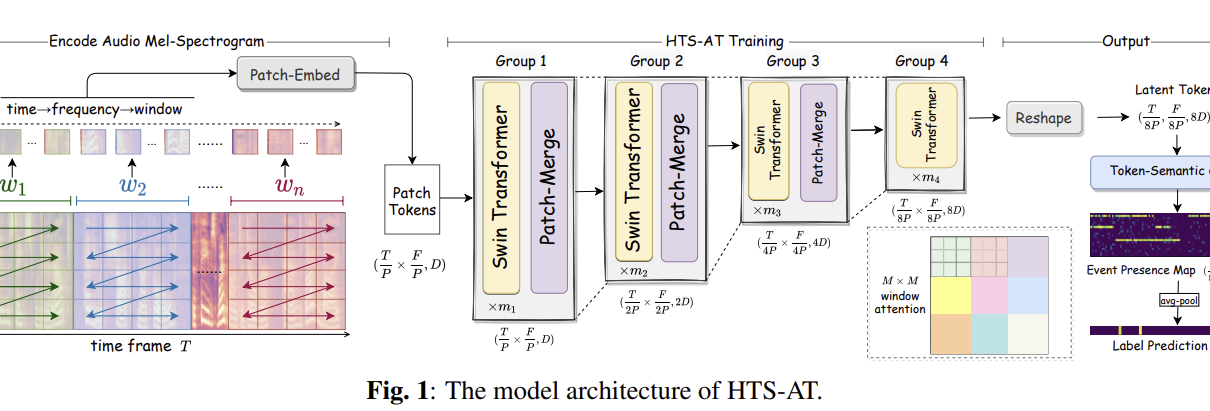

HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound Classification and Detection

Ke Chen, Xingjian Du, Bilei Zhu, Zejun Ma, Taylor Berg-Kirkpatrick, Shlomo Dubnov. ICASSP 2022 – 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), May 2022, Singapore, France. pp.646-650. Read full publication. Abstract: Audio classification is an important task of mapping audio samples into their corresponding labels. Recently, the transformer model with self-attention […]

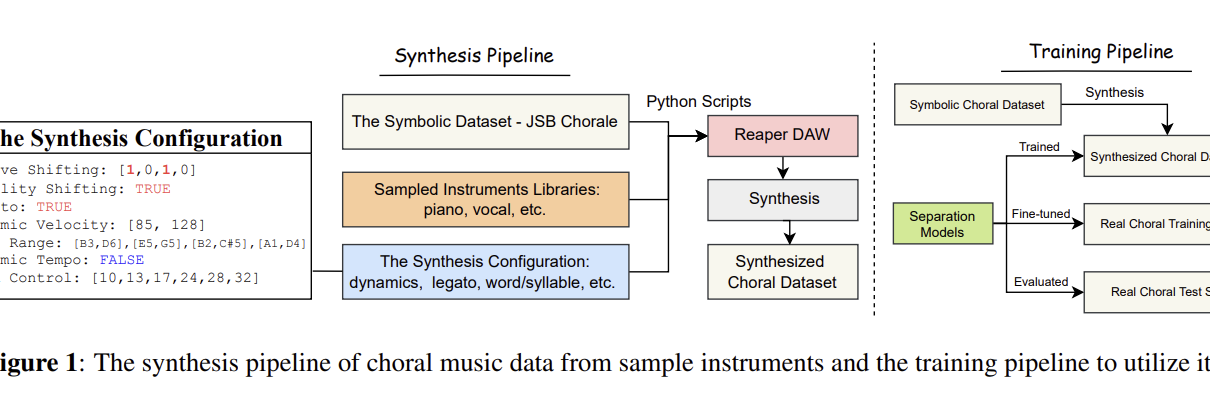

Improving Choral Music Separation through Expressive Synthesized Data from Sampled Instruments

Ke Chen, Hao-Wen Dong, Yi Luo, Julian McAuley, Taylor Berg-Kirkpatrick, Miller Puckette, Shlomo Dubnov. Proceedings of the 23rd International Society for Music Information Retrieval Conference, Dec 2022, Bengaluru, India. Read full publication. Abstract: Choral music separation refers to the task of extracting tracks of voice parts (e.g., soprano, alto, tenor, and bass) from mixed audio. […]

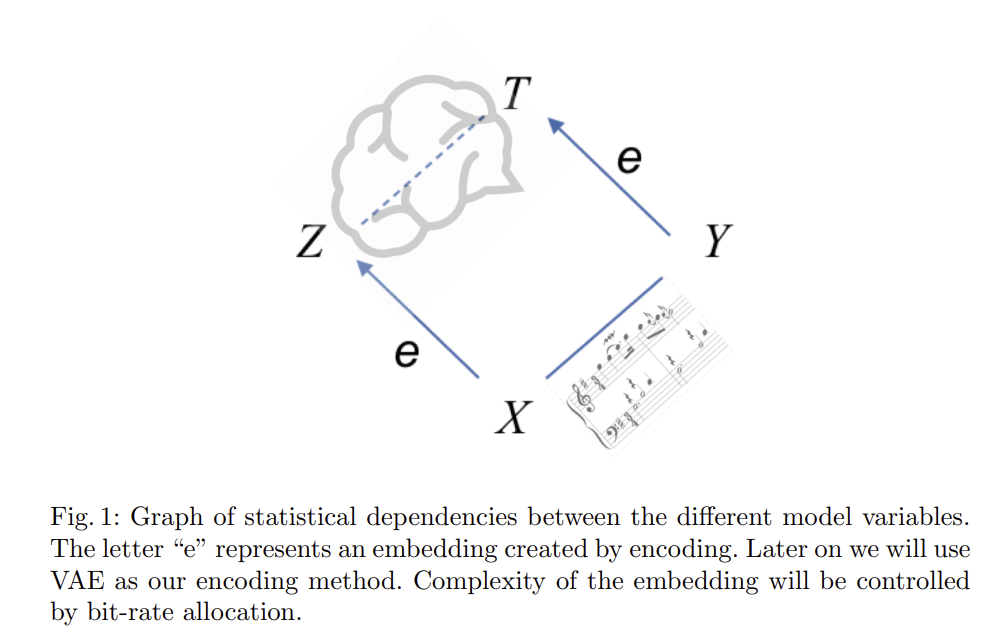

Deep Music Information Dynamics

Shlomo Dubnov, Ke Chen, Kevin Huang. Journal of Creative Music Systems, 2022, 1. Read full publication. Abstract: Generative musical models often comprise of multiple levels of structure, presuming that the process of composition moves between background to foreground, or between generating musical surface and some deeper and reduced representation that governs hidden or latent dimensions […]

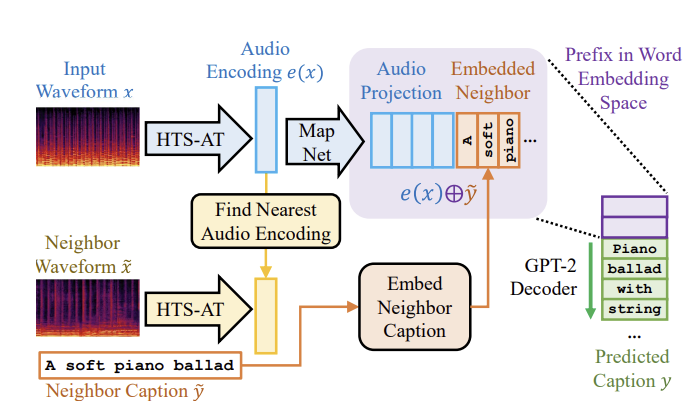

Retrieval Guided Music Captioning via Multimodal Prefixes

Nikita Srivatsan, Ke Chen, Shlomo Dubnov, Taylor Berg-Kirkpatrick. Thirty-Third International Joint Conference on Artificial Intelligence (IJCAI-24), Aug 2024, Jeju, South Korea. pp.7762-7770. Read full publication. Abstract: In this paper we put forward a new approach to music captioning, the task of automatically generating natural language descriptions for songs. These descriptions are useful both for categorization […]

Somax2 Workshop and Concert at Università di Pisa

Marco Fiorini was invited to lead a workshop at Università di Pisa on 4 November 2024, showcasing REACH advancements in Music Improvisation with Co-Creative Agents. The workshop focused on Somax2, the REACH co-creative environment for music improvisation, and saw a large number of students from Università di Pisa interacting with it for the first time. A […]