Read original: arXiv:2409.12346 – Published 20/09/2024 by Tornike Karchkhadze, Mohammad Rasool Izadi, Shlomo Dubnov.

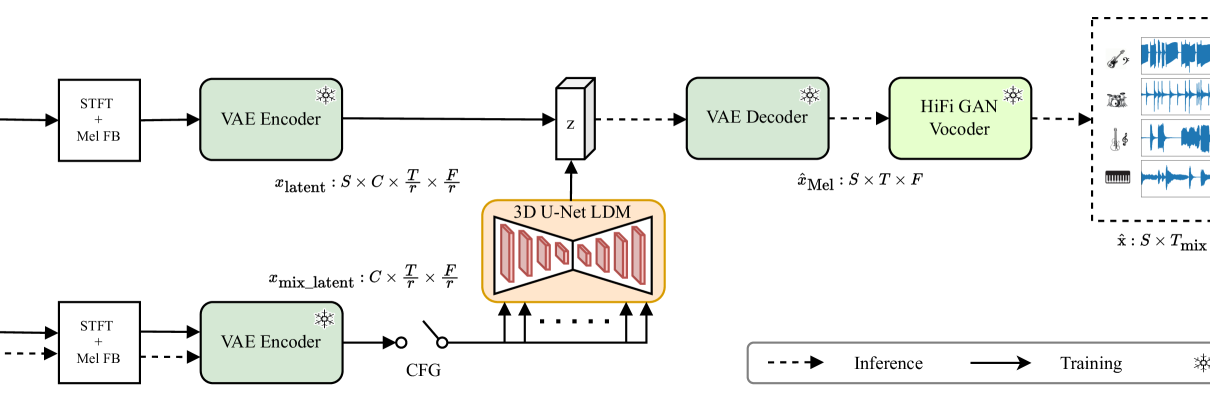

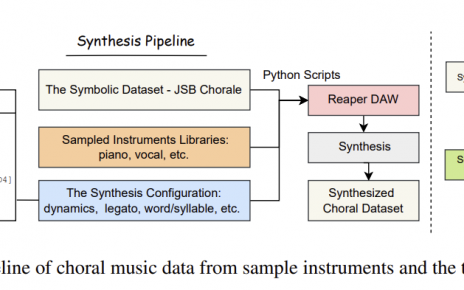

Abstract: Diffusion models have recently shown strong potential in both music generation and music source separation tasks. Although still in its early stages, a trend is emerging towards integrating these tasks into a single framework, as both involve generating musically aligned parts and can be seen as facets of the same generative process. In this work, we introduce a latent diffusion-based multi-track generation model capable of both source separation and multi-track music synthesis by learning the joint probability distribution of tracks sharing a musical context. Our model also enables arrangement generation by creating any subset of tracks given the others. We trained our model on the Slakh2100 dataset, compared it with an existing simultaneous generation and separation model, and observed significant improvements across objective metrics for source separation, music generation, and arrangement generation tasks. Sound examples are available at this https URL.
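
To make the idea of "creating any subset of tracks given the others" more concrete, here is a minimal sketch of inpainting-style sampling over a stack of per-track latents. It shows one common way to condition a joint diffusion model on known tracks and is not necessarily the procedure used in the paper. The names `forward_noise`, `generate_missing_tracks`, `denoise_step`, and `alpha_bars` are all hypothetical, and `denoise_step` is a placeholder for a trained reverse-diffusion step.

```python
import numpy as np


def forward_noise(x0, alpha_bar_t, rng):
    """Sample x_t ~ N(sqrt(a) * x0, (1 - a) * I), the usual forward diffusion marginal."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * eps


def generate_missing_tracks(known, known_mask, denoise_step, alpha_bars, rng):
    """Inpainting-style sampling over a stack of track latents (illustrative only).

    known:        (num_tracks, dim) array; rows where known_mask is False are ignored
    known_mask:   (num_tracks,) boolean array, True for tracks that are given
    denoise_step: hypothetical callable (x_t, t) -> x_{t-1}, standing in for a trained model
    alpha_bars:   (T,) cumulative noise schedule, decreasing as t grows
    """
    mask = known_mask[:, None]  # broadcast the per-track mask over the latent dimension
    x = rng.standard_normal(known.shape)  # start every track from pure noise
    for t in range(len(alpha_bars) - 1, -1, -1):
        # Overwrite the given tracks with a copy noised to the current level,
        # so the model always sees the known context alongside its own guesses.
        x = np.where(mask, forward_noise(known, alpha_bars[t], rng), x)
        # One joint reverse step over all tracks at once.
        x = denoise_step(x, t)
    # Return the clean given tracks together with the generated ones.
    return np.where(mask, known, x)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    known = np.ones((4, 8))  # four toy "tracks" with 8-dimensional latents
    known_mask = np.array([True, False, True, False])  # tracks 0 and 2 are given
    alpha_bars = np.linspace(0.99, 0.01, 10)
    toy_step = lambda x, t: 0.5 * x  # placeholder, not a real denoiser
    out = generate_missing_tracks(known, known_mask, toy_step, alpha_bars, rng)
    print(out.shape)
```

In this framing, setting every entry of `known_mask` to `False` corresponds to generating all tracks from scratch, while marking some tracks as known corresponds to arrangement generation around them. Source separation conditions on the mixture rather than on individual tracks, so it would need a different conditioning path than this sketch provides.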