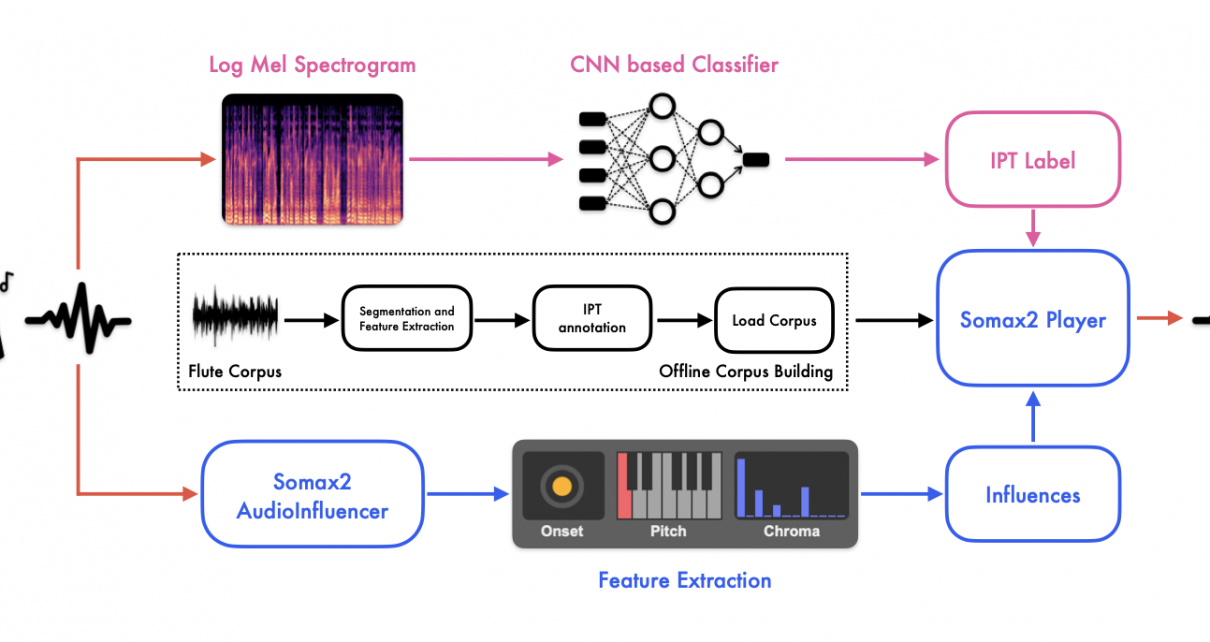

An article by Nicolas Brochec*, Marco Fiorini*, Mikhail Malt and Gérard Assayag (* equal contribution) has been accepted for the 50th International Computer Music Conference (ICMC) in Boston, United States. Read the full paper. Video demo. Abstract: This paper presents a significant advancement in the co-creative capabilities of Somax2 through the integration of real-time recognition […]

Research

ICMC 2025 in Boston: A REACH show!

ICMC 2025 saw an impressive series of REACH-related events, such as the opening concert, where the REACH team performed with Somax2 and SoVo (Somax2 + George Lewis’ Voyager) alongside legendary musicians Roscoe Mitchell, Steve Lehman and George Lewis. The next day there was an evening concert entirely devoted to Somax2 (see program below), and […]

Somax2 version 2.7 is out!

This version introduces Max 9 compatibility, a redesigned user interface, multi-label corpus building, and new label handling for filtering and real-time control. Max 9 Compatibility: Somax2 now runs on Max 9.0.3 or later (earlier versions of Max 9 contained a bug in groove~ and buffer~ that caused issues with corpus loading). Updated User Interface: Somax2’s interface has been redesigned and updated […]

Elez-Boulaine: Homage to Pierre Boulez in Portrait Of The Artist In The Age Of AI

ERC COSMOS is pleased to partner with ERC REACH in a demonstration-performance that sets the stage for a panel discussion and a performance on AI’s impact on art creation, at the Night of Ideas at the Institut Français du Royaume-Uni (17 Queensberry Place, London SW7 2DT) on 6 February, 19:20 to 20:10, in Les Salons. The event is free and open to the public with registration. […]

The Application of Somax2 in the Live-Electronics Design of Roberto Victório’s Chronos IIIc

William Teixeira, Marco Fiorini, Mikhail Malt, Gérard Assayag. The Application of Somax2 in the Live-Electronics Design of Roberto Victório’s Chronos IIIc. Musica Hodie, 2024, 24, ⟨10.5216/mh.v24.78611⟩. ⟨hal-04760169⟩ William Teixeira: “Excited to share one of the big research achievements I could be part of. It was just published in Musica Hodie the article concerning ‘The Application of […]

Somax2 in Prague

Marco Fiorini is giving two Somax2 workshops in Prague (CZ), on 12 December 2024 at Kampus Hybernská and on 13 December 2024 at Punctum, followed by a concert featuring Michal Wróblewski on sax and Jonas Gerigk on double bass. The trio will present works from their album Paragliding, released in 2023 on Ma Records. Paragliding by Gerigk […]

Somax2 Workshop and Master Class at the Conservatoire de Strasbourg

Somax2 master class at the Conservatoire de Strasbourg, given by Gérard Assayag, Marco Fiorini, Mikhail Malt and Georges Bloch of IRCAM at the invitation of Tom Mays, from 4 to 6 December. https://www.hear.fr/agenda/somax2/ The students were able to discover Somax2, get hands-on with it, learn to play with it, compose with it, and record corpora, with a concert of […]