Research

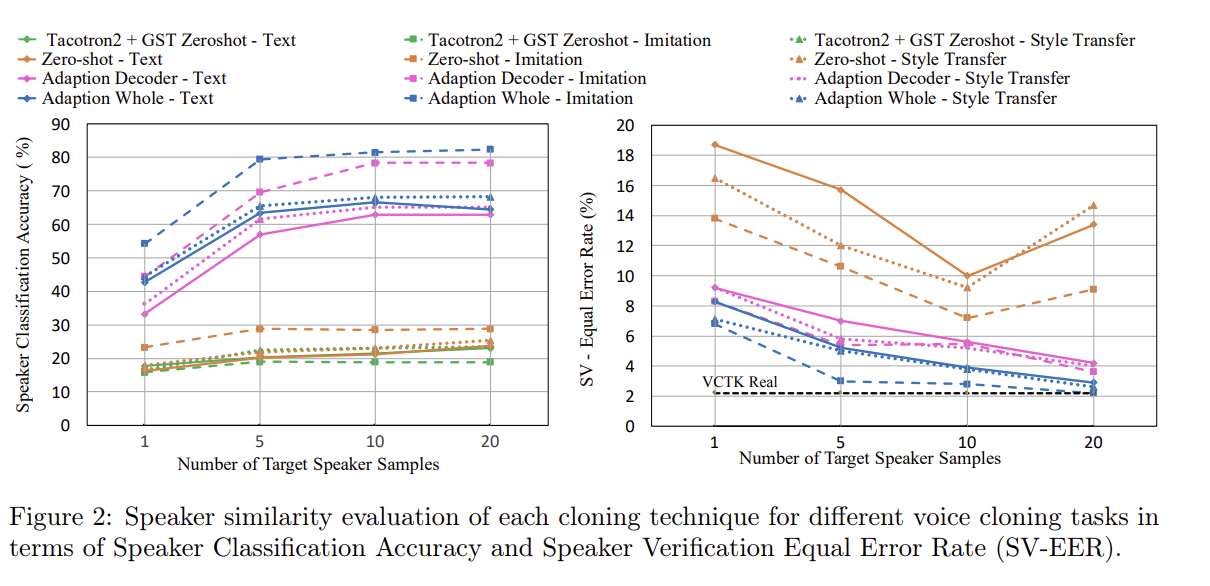

Expressive Neural Voice Cloning

By Paarth Neekhara, Shehzeen Hussain, Shlomo Dubnov, Farinaz Koushanfar, Julian McAuley. Sat, 30 Jan 2021. Abstract: Voice cloning is the task of learning to synthesize the voice of an unseen speaker from a few samples. While current voice cloning methods achieve promising results in Text-to-Speech (TTS) synthesis for a new voice, these […]

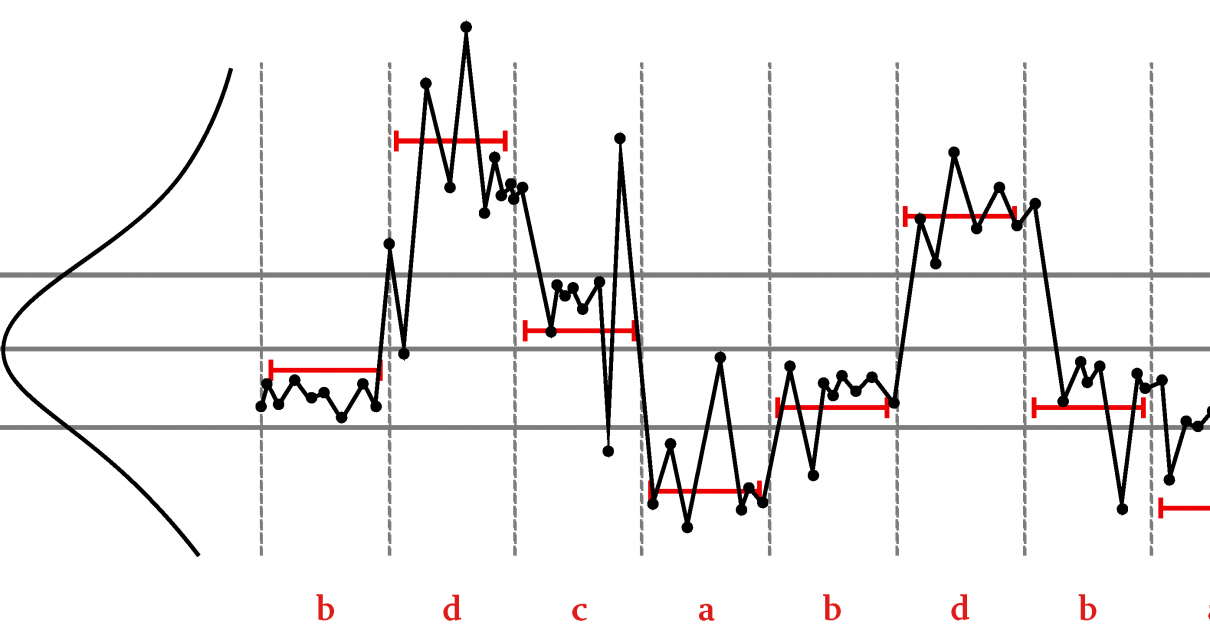

Predictive Quantization and Symbolic Dynamics

Dubnov, S. Predictive Quantization and Symbolic Dynamics. Algorithms 2022, 15, 484 (https://doi.org/10.3390/a15120484). Abstract: Capturing long-term statistics of signals and time series is important for modeling recurrent phenomena, especially when such recurrences are a-periodic and can be characterized by the approximate repetition of variable length motifs, such as patterns in human gestures and trends in […]

Co-Creativity and AI Ethics

By Vignesh Gokul. Computer Science [cs]. University of California San Diego, 2024. English. Abstract: With the development of intelligent chatbots, humans have found a method to communicate with artificial digital assistants. However, human beings are able to communicate an enormous amount of information without ever saying a word, e.g., gestures and music. […]

Somax2 presented at Notam Online Max Meetup

On Monday 25 November, at 19:00 (GMT +1) Marco Fiorini will lead an Online Max Meetup for Notam, the Norwegian centre for technology, art and music. The session will present Somax2 and discuss Music Improvisation and Composition with Co-Creative Agents, showcasing concrete research and artistic outcomes of the REACH project to the online Max community. […]

REACHing OUT! Strikes Back!

Fri 15 to Sun 17 November, Athénor – Le LiFE – St Nazaire. After the Manifeste festival at the Centre Georges Pompidou in June 2023, GMEM's Propagations festival at the Friche de la Belle de Mai in Marseille in May 2024, and the Improtech festival in Tokyo in August 2024, REACHing OUT! returns for the festival […]

Generative AI for Music and Audio

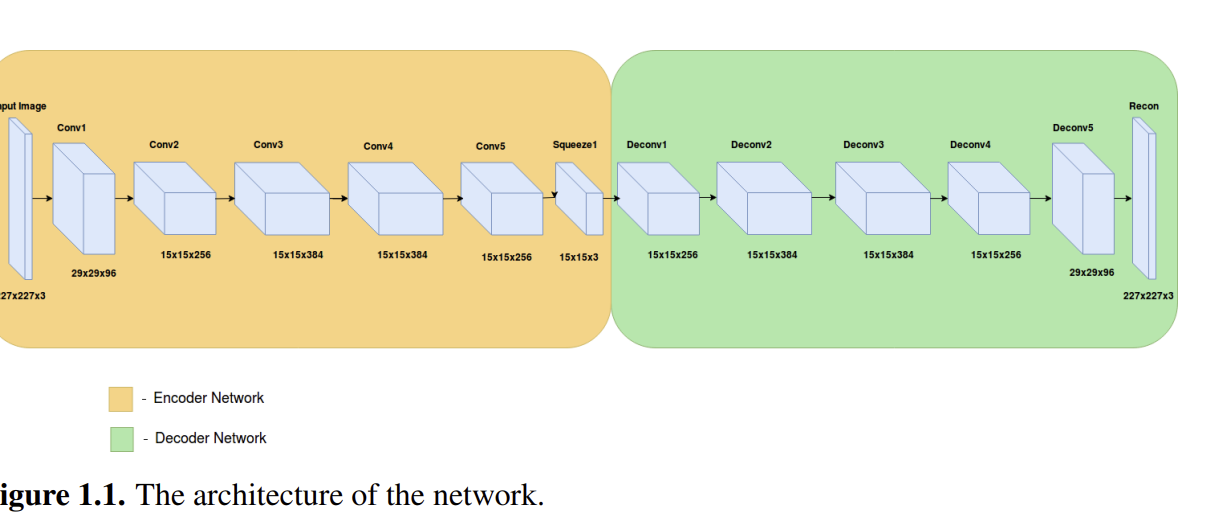

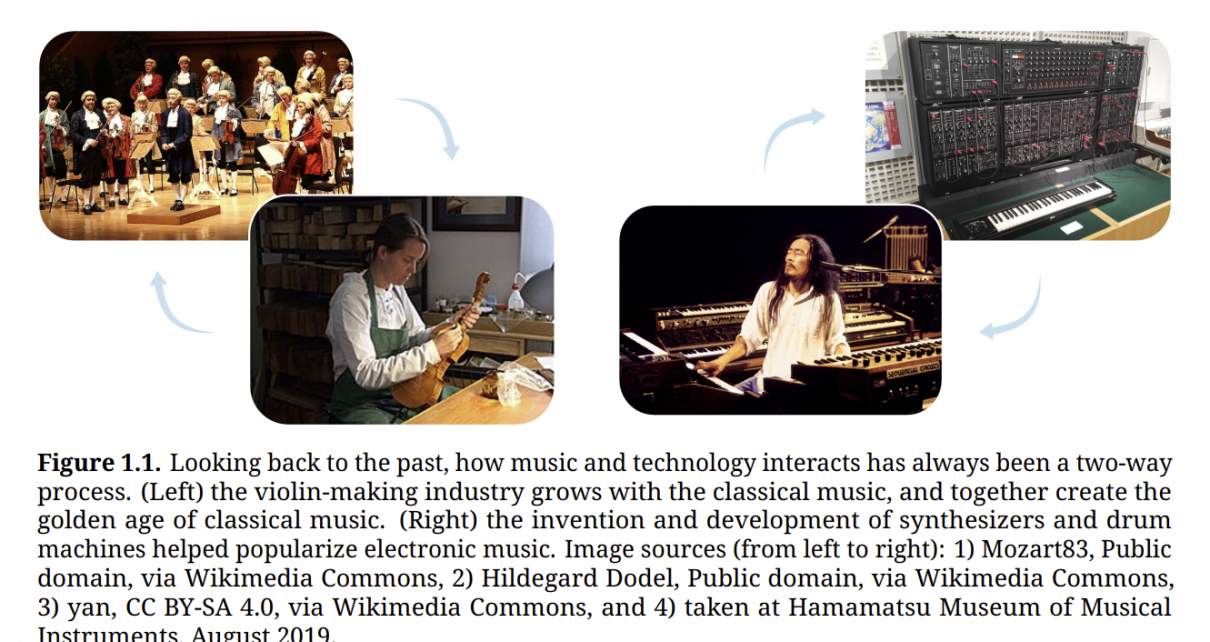

By Hao-Wen Dong. Sound [cs.SD]. University of California San Diego, 2024. English. Abstract: Generative AI has been transforming the way we interact with technology and consume content. In the next decade, AI technology will reshape how we create audio content in various media, including music, theater, films, games, podcasts, and short videos. […]

Cyber-improvisations and co-creativity: when jazz plays with machines

By Gérard Assayag, Marc Chemillier, Bernard Lubat. 2023, pp. 38-39. Abstract: Can you tell us how you met and why you wanted to work together? Marc Chemillier: Gérard Assayag and I began designing software for musical improvisation in the early 2000s. Gérard was working on stylistic simulation and exploring […]

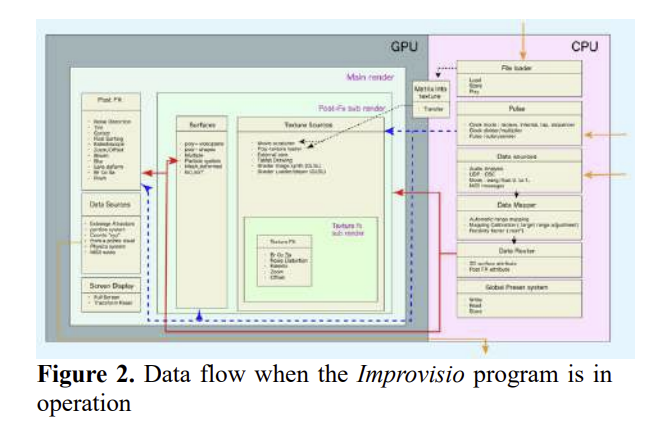

Improvisio: towards a visual music improvisation tool for musicians in a cyber-human co-creation context

By Sabina Covarrubias (STMS). Journées d’informatique musicale, Micael Antunes; Jonathan Bell; Javier Elipe Gimeno; Mylène Gioffredo; Charles de Paiva Santana; Vincent Tiffon, May 2024, Marseille, France. Abstract: Improvisio is software for musicians who want to improvise visual music. Its development is part of the REACH project. It is useful for creating visual […]