Article by Alberto Gatti and Marco Fiorini (equal contribution) has been accepted for the Sound and Music Computing Conference (SMC2025) in Graz, Austria. Read the full paper. Video demo. Abstract: This paper presents a novel methodology for creating dynamic auditory landscapes in co-creative musical interaction through the Somax2 system, introducing a new approach to spatial […]

Publications

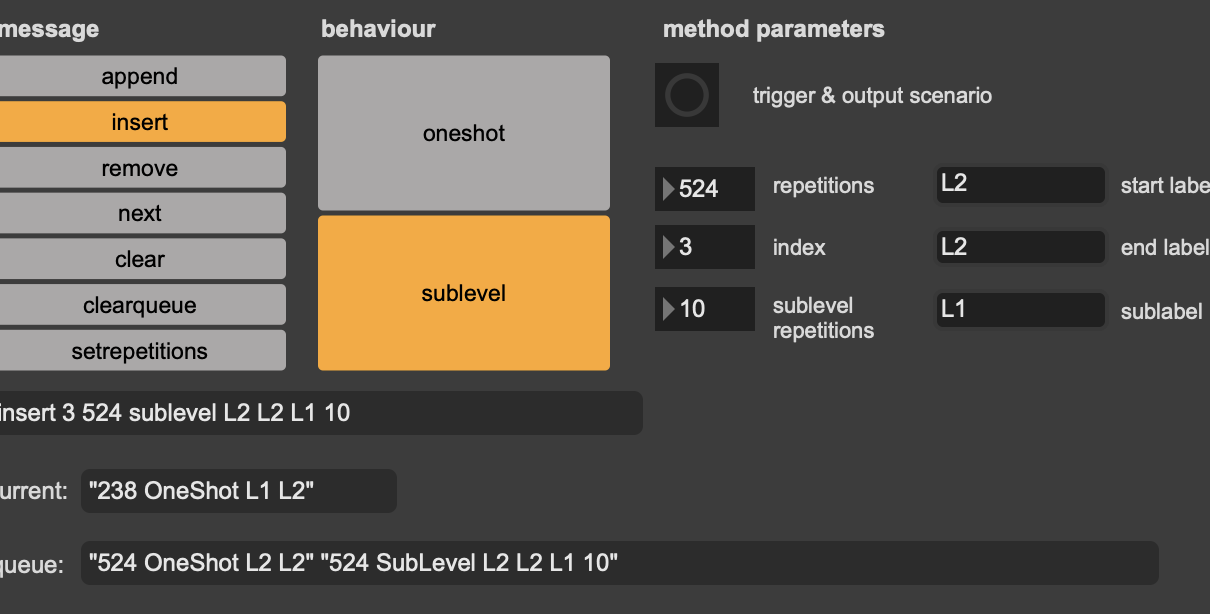

A First Implementation of Somax2 Behaviours Using MORFOS Form, Segmentation and Organisation

Article by Joséphine Calandra, Marco Fiorini and Gérard Assayag has been accepted for the Journées de l’Informatique Musicale (JIM2025) in Lyon, France. Read the article. Abstract: Somax2 is a multi-agent improvisation software based on a probabilistic recombination model of an audio or MIDI corpus, annotated according to several musical dimensions, and sensitive to influences of the external […]

Introducing EG-IPT and ipt~: a novel electric guitar dataset and a new Max/MSP object for real-time classification of instrumental playing techniques

Article by Marco Fiorini*, Nicolas Brochec*, Joakim Borg and Riccardo Pasini (* equal contribution) has been accepted for the New Interfaces for Musical Expression (NIME) Conference in Canberra, Australia. Read the full paper. Video demo. Abstract: This paper presents two key contributions to the real-time classification of Instrumental Playing Techniques (IPTs) in the context […]

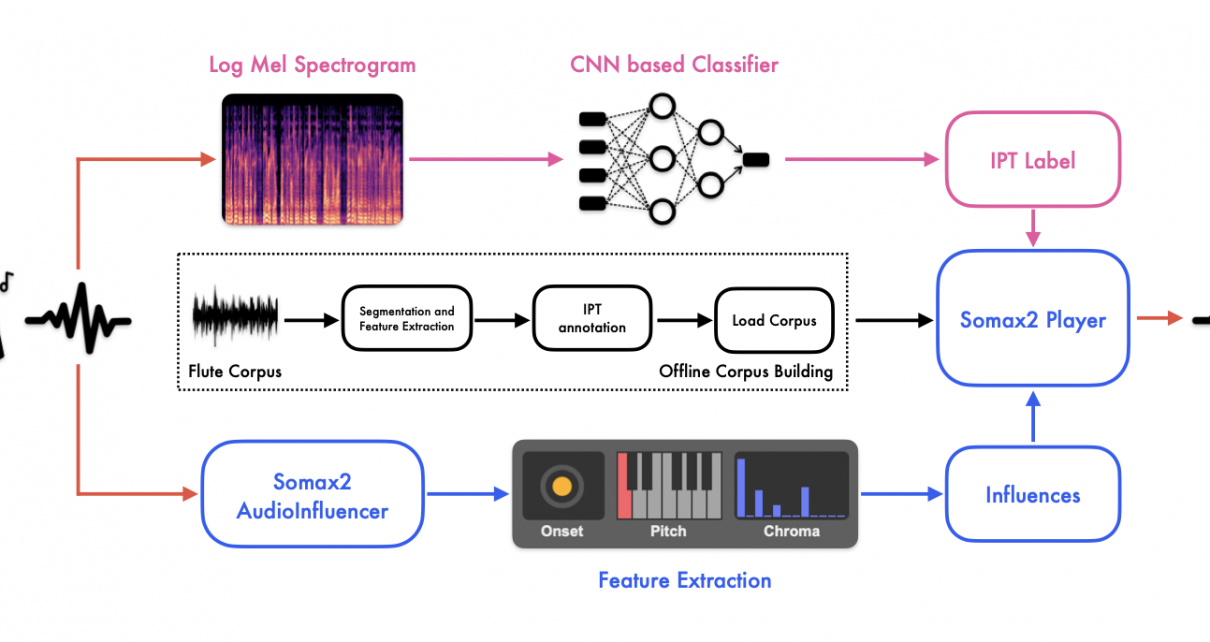

Interactive Music Co-Creation with an Instrumental Technique-Aware System: A Case Study with Flute and Somax2

Article by Nicolas Brochec*, Marco Fiorini*, Mikhail Malt and Gérard Assayag (* equal contribution) has been accepted for the 50th International Computer Music Conference (ICMC) in Boston, United States. Read the full paper. Video demo. Abstract: This paper presents a significant advancement in the co-creative capabilities of Somax2 through the integration of real-time recognition […]

The Application of Somax2 in the Live-Electronics Design of Roberto Victório’s Chronos IIIc

William Teixeira, Marco Fiorini, Mikhail Malt, Gérard Assayag. The Application of Somax2 in the Live-Electronics Design of Roberto Victório’s Chronos IIIc. Musica Hodie, 2024, 24, ⟨10.5216/mh.v24.78611⟩. ⟨hal-04760169⟩ William Teixeira: “Excited to share one of the big research achievements I could be part of. It was just published in Musica Hodie the article concerning ‘The Application of […]

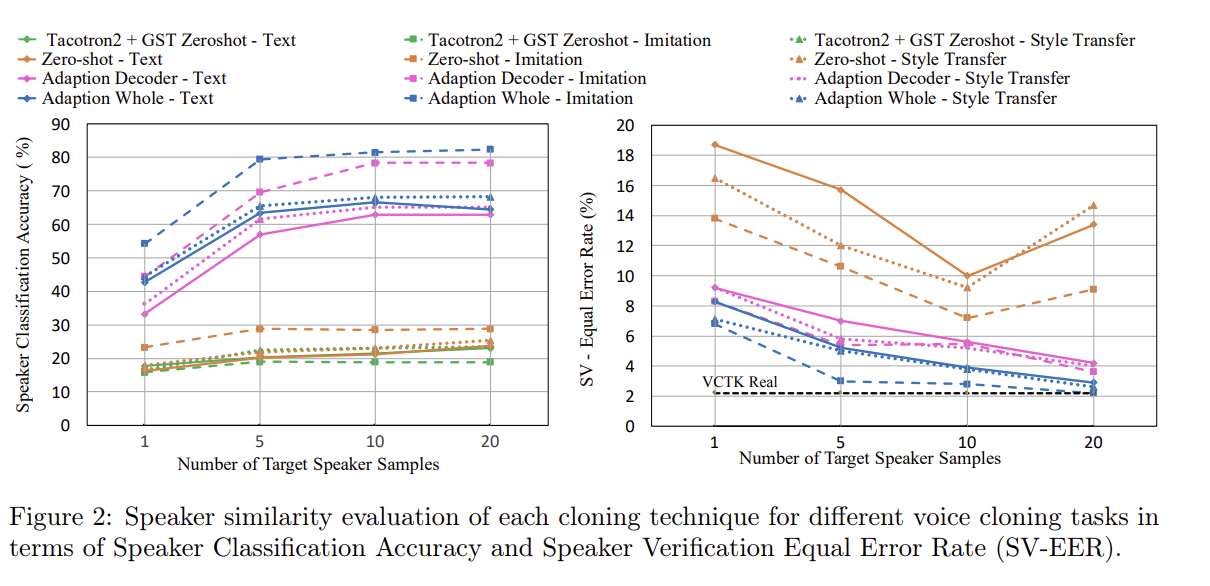

Expressive Neural Voice Cloning

By Paarth Neekhara, Shehzeen Hussain, Shlomo Dubnov, Farinaz Koushanfar, Julian McAuley. Sat, 30 Jan 2021. Read full publication. Abstract: Voice cloning is the task of learning to synthesize the voice of an unseen speaker from a few samples. While current voice cloning methods achieve promising results in Text-to-Speech (TTS) synthesis for a new voice, these […]

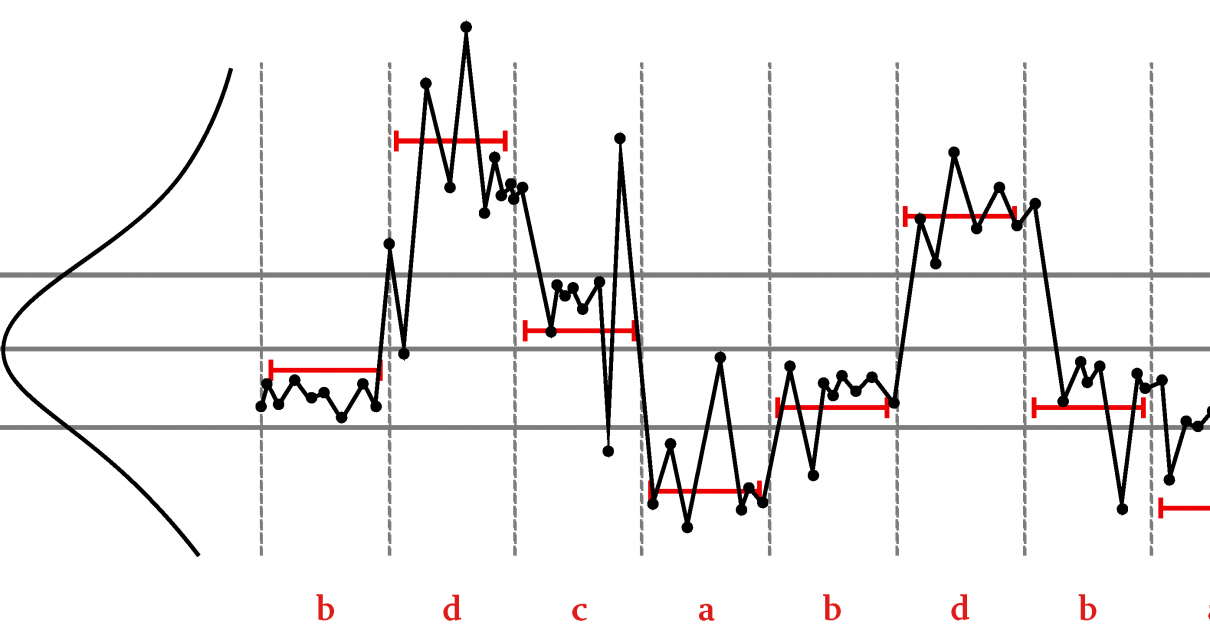

Predictive Quantization and Symbolic Dynamics

Dubnov, S. Predictive Quantization and Symbolic Dynamics. Algorithms 2022, 15, 484. https://doi.org/10.3390/a15120484 Read full article. Abstract: Capturing long-term statistics of signals and time series is important for modeling recurrent phenomena, especially when such recurrences are a-periodic and can be characterized by the approximate repetition of variable length motifs, such as patterns in human gestures and trends in […]

Co-Creativity and AI Ethics

By Vignesh Gokul. Computer Science [cs]. University of California San Diego, 2024. English. Read full publication. Abstract: With the development of intelligent chatbots, humans have found a method to communicate with artificial digital assistants. However, human beings are able to communicate an enormous amount of information without ever saying a word, e.g., gestures and music. […]

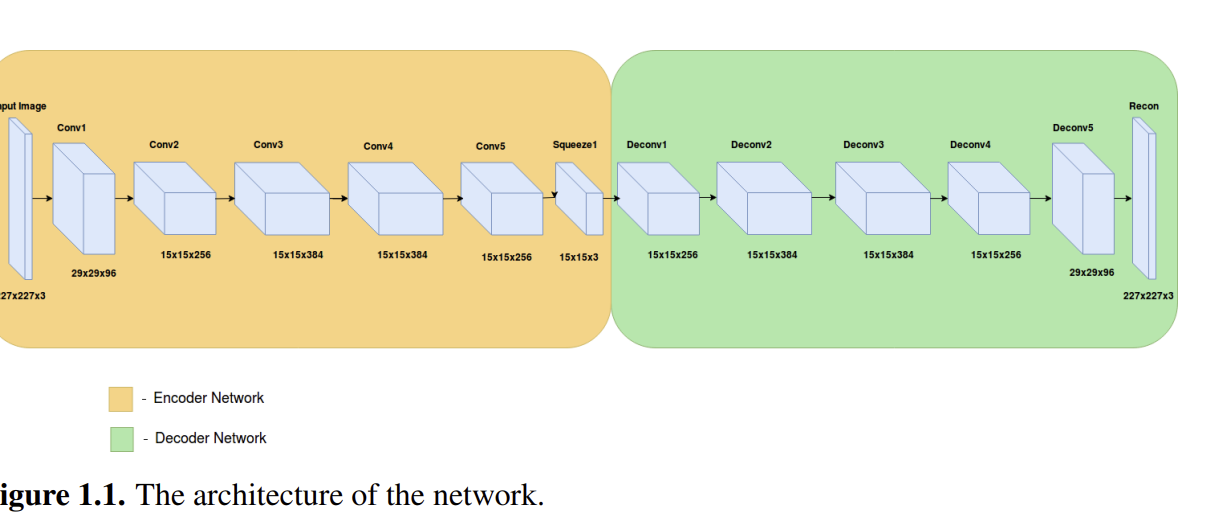

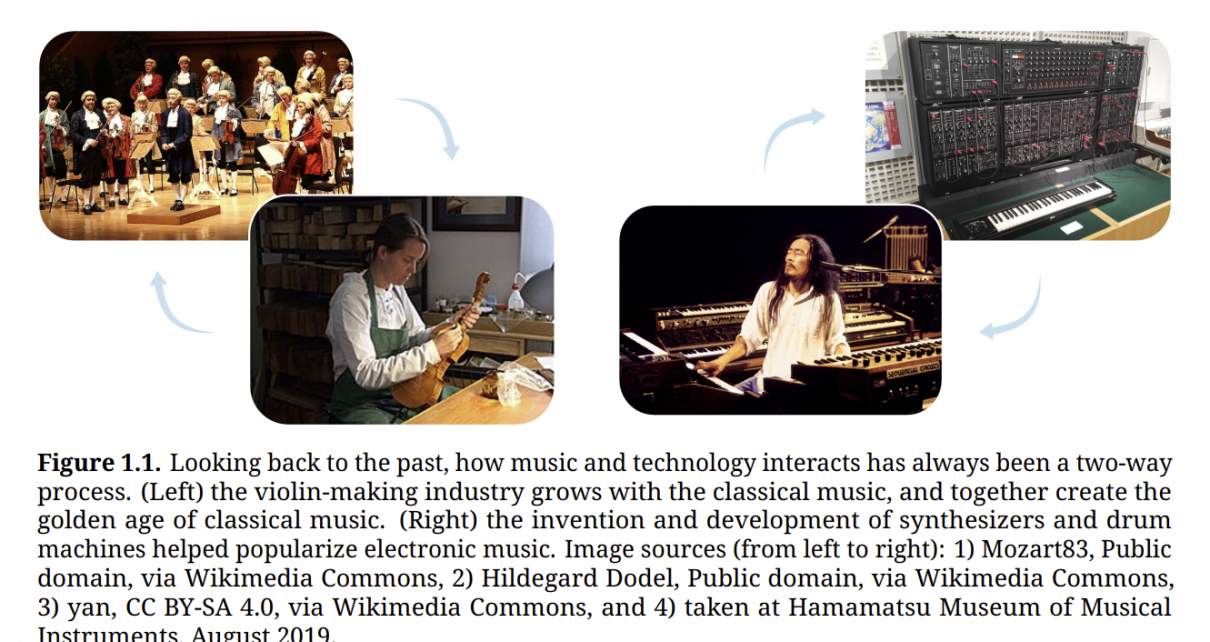

Generative AI for Music and Audio

By Hao-Wen Dong. Sound [cs.SD]. University of California San Diego, 2024. English. Read full publication. Abstract: Generative AI has been transforming the way we interact with technology and consume content. In the next decade, AI technology will reshape how we create audio content in various media, including music, theater, films, games, podcasts, and short videos. […]

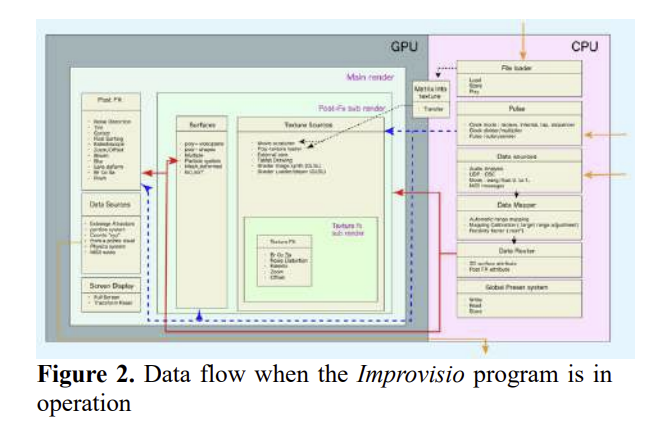

Improvisio: towards a visual music improvisation tool for musicians in a cyber-human co-creation context

By Sabina Covarrubias Stms. Journées d’informatique musicale, Micael Antunes; Jonathan Bell; Javier Elipe Gimeno; Mylène Gioffredo; Charles de Paiva Santana; Vincent Tiffon, May 2024, Marseille, France. Read full publication. Abstract: Improvisio is a software for musicians who want to improvise visual music. Its development is part of the REACH project. It is useful to create visual […]